Social Media ADR False Positive Rate Calculator

How to Use This Tool

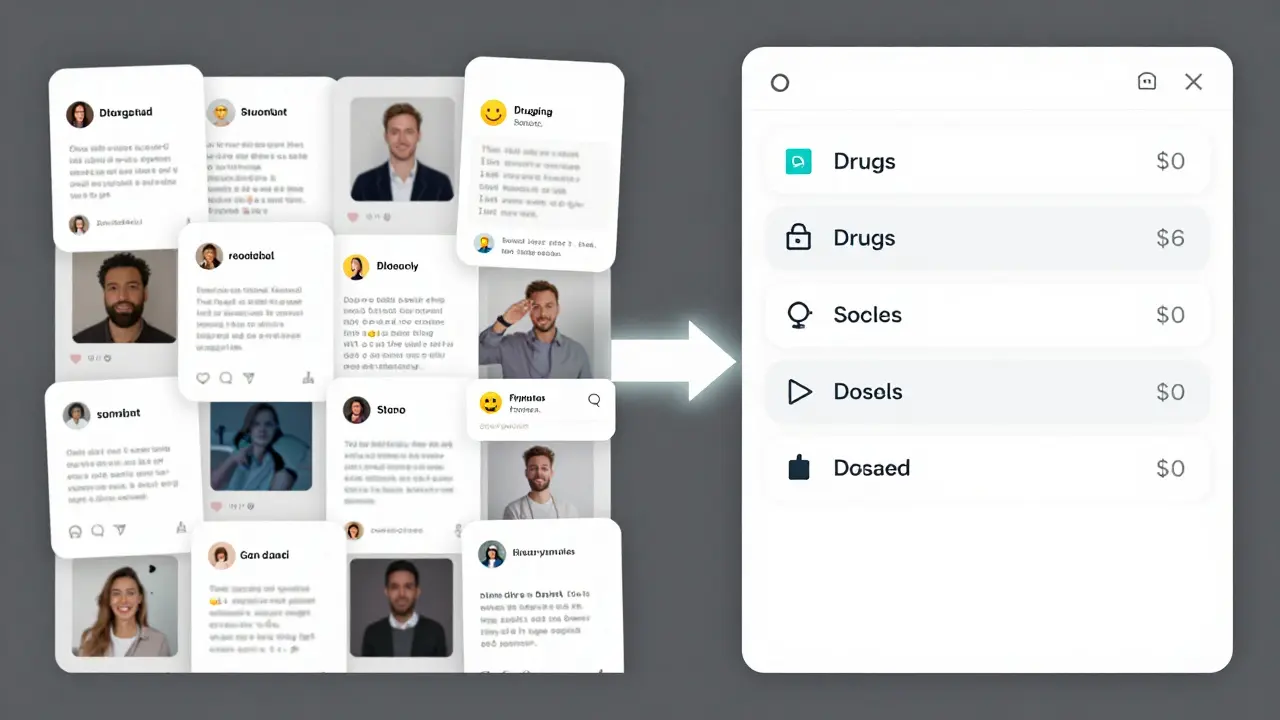

This calculator estimates the expected false positive rate for social media-based pharmacovigilance using data from the article. The article reports that 68% of potential ADR reports on social media turn out to be false. Input your values to see how different factors impact reliability.

Your calculated results:

Every year, millions of people take prescription drugs. Most of them do fine. But for some, things go wrong - unexpected rashes, strange dizziness, heart palpitations, or worse. These are called adverse drug reactions (ADRs). Traditionally, doctors and pharmacists report these to government databases. But here’s the problem: only 5-10% of real ADRs ever make it into those systems. That means at least 90% of the warning signs are flying under the radar. Now, a new source is stepping in: social media.

Think about it. Many people don’t go to their doctor when they feel weird after taking a new pill. They go to Reddit. They tweet. They post on Facebook groups. They vent in health forums. And that raw, unfiltered chatter? It’s becoming a goldmine for drug safety teams. Companies like Pfizer, Novartis, and AstraZeneca are now using AI to scan millions of social posts every day, looking for patterns that could mean a drug is dangerous. In 2023, one company caught a rare skin reaction to a new antihistamine 112 days faster than the traditional reporting system would have - thanks to a cluster of posts on platforms including Instagram and Twitter. Official reporting channels alone would not have caught it that quickly.

How Social Media Finds Hidden Drug Risks

It’s not just about reading posts. It’s about sorting through noise. Imagine trying to find a needle in a haystack - except the haystack is made of 15,000 posts an hour, written in slang, typos, and half sentences. That’s what pharmacovigilance teams face.

Here’s how they do it:

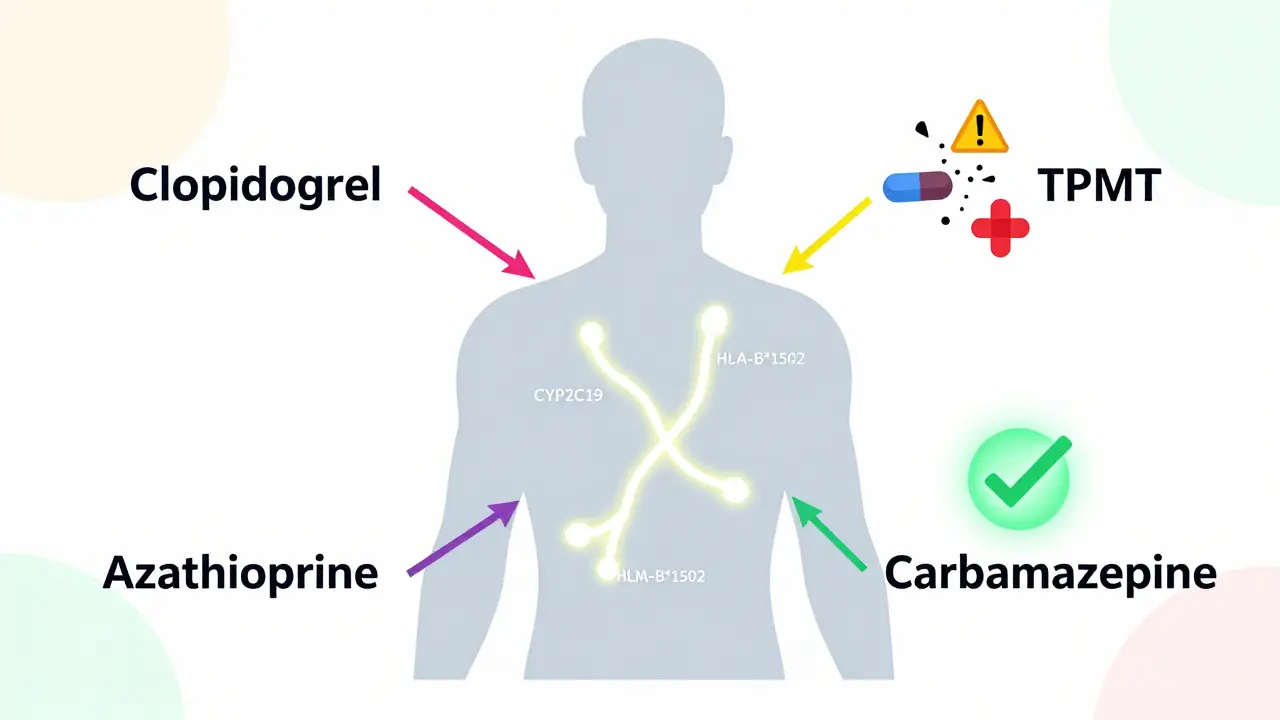

- Named Entity Recognition (NER): AI scans text to pull out key pieces - drug names, symptoms, dosages. If someone writes, “I took 50mg of Zoloft and got a seizure,” the system flags “Zoloft,” “50mg,” and “seizure” as separate data points.

- Topic Modeling: Instead of looking for specific words, this method finds clusters of related language. If dozens of users start talking about “brain fog” after taking a new cholesterol drug, even if they never mention the drug name, the system notices.

- AI Filtering: Modern systems can process posts in 30 languages. They learn to ignore memes, jokes, or people saying “I feel like a zombie” after coffee - not the drug.
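To make the extraction step concrete, here is a minimal sketch that pulls a drug name, a dose, and a symptom out of a post. It is a toy stand-in for NER, not how any company mentioned here does it: it uses a hand-written lexicon and a regular expression instead of a trained model. The word lists and the `extract_entities` function are hypothetical and exist only for illustration.

```python
import re

# Hypothetical, tiny lexicons for illustration only. A real system would rely
# on a trained model and a full drug dictionary rather than hand-picked words.
DRUGS = {"zoloft", "lipitor"}
SYMPTOMS = {"seizure", "rash", "dizziness", "brain fog"}

# Matches doses such as "50mg", "2.5 mg", or "10 ml".
DOSE_PATTERN = re.compile(r"\b(\d+(?:\.\d+)?)\s?(mg|mcg|g|ml)\b", re.IGNORECASE)


def find_terms(text: str, lexicon: set) -> list:
    """Return the lexicon terms that appear as whole words in the text."""
    return sorted(
        term for term in lexicon
        if re.search(rf"\b{re.escape(term)}\b", text)
    )


def extract_entities(post: str) -> dict:
    """Split a post into separate drug, dose, and symptom data points."""
    text = post.lower()
    doses = [f"{amount}{unit.lower()}" for amount, unit in DOSE_PATTERN.findall(post)]
    return {
        "drugs": find_terms(text, DRUGS),
        "doses": doses,
        "symptoms": find_terms(text, SYMPTOMS),
    }


print(extract_entities("I took 50mg of Zoloft and got a seizure"))
# {'drugs': ['zoloft'], 'doses': ['50mg'], 'symptoms': ['seizure']}
```

A lexicon like this breaks down fast on slang, typos, and misspelled brand names, which is exactly why real pipelines lean on learned models for this step.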

By 2024, 73% of big pharma companies were using AI for this. These tools can spot a potential safety signal in real time. One case study showed a new diabetes drug triggered 170 social media reports of low blood sugar within two weeks - but not a single formal report had been filed yet. The drug’s label was updated within six weeks. That’s the power of social media: speed.
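What does "spotting a signal" look like in code? One very simple way to picture it is counting how many matching posts land inside a short time window and flagging the drug when the count crosses a threshold. The sketch below assumes exactly that. The function names, the 14-day window, and the threshold are all hypothetical, and a real pharmacovigilance system would use far more careful statistics than a raw count.

```python
from datetime import date, timedelta


def max_reports_in_window(report_dates, window_days=14):
    """Return the largest number of reports that fall inside any window of window_days."""
    dates = sorted(report_dates)
    best = 0
    start = 0
    for end, current in enumerate(dates):
        # Move the left edge forward until the window spans fewer than window_days days.
        while (current - dates[start]).days >= window_days:
            start += 1
        best = max(best, end - start + 1)
    return best


def is_candidate_signal(report_dates, threshold, window_days=14):
    """Flag a drug when enough reports cluster inside one window (hypothetical rule)."""
    return max_reports_in_window(report_dates, window_days) >= threshold


# Made-up post dates: four posts in the first few days, two more weeks later.
launch = date(2024, 1, 1)
posts = [launch + timedelta(days=d) for d in (0, 1, 1, 3, 20, 21)]

print(max_reports_in_window(posts))             # 4
print(is_candidate_signal(posts, threshold=3))  # True
```

A flag from a rule like this is only a prompt for human review. It says nothing about whether the drug actually caused the symptom.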

The Dark Side: False Alarms and Privacy Nightmares

But here’s the catch: most of what’s posted isn’t real.

Amethys Insights found that 68% of potential ADR reports on social media turn out to be false. People mix up drug names. They blame side effects on the wrong medication. Some exaggerate. Others post about symptoms caused by stress, alcohol, or a bad night’s sleep. In one case, a woman wrote, “My new pill gave me wings.” The AI flagged it. A human had to step in - and laugh.

And then there’s the data gap. Most social posts lack critical info:

- 92% don’t mention the patient’s age, weight, or medical history.

- 87% don’t say how much of the drug they took.

- 100% can’t be verified - you have no idea if the person is real, if they’re lying, or if they even took the drug.

Worse, some drugs are invisible on social media. If a medication is prescribed to fewer than 10,000 people a year - say, a rare cancer drug - there simply aren’t enough posts to find a signal. The FDA found a 97% false positive rate for those drugs. Social media works best for big, widely used drugs. For rarely prescribed ones? It’s close to useless.
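To see what those percentages mean for a review team, here is a small worked example. It takes the two false positive rates quoted above (68% overall, 97% for rarely prescribed drugs) and applies them to a hypothetical batch of 1,000 flagged posts. The batch size and the `expected_genuine_reports` function are assumptions for illustration, not figures from any study.

```python
def expected_genuine_reports(flagged_posts: int, false_positive_rate: float) -> int:
    """Estimate how many flagged posts describe a real ADR, rounded to a whole post."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return round(flagged_posts * (1.0 - false_positive_rate))


FLAGGED = 1000  # hypothetical batch size, not a figure from the article

print(expected_genuine_reports(FLAGGED, 0.68))  # 320
print(expected_genuine_reports(FLAGGED, 0.97))  # 30
```

Under these assumptions, reviewers would wade through 1,000 posts to find roughly 320 genuine reports for a typical drug, and only about 30 for a rarely prescribed one.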

And privacy? Huge issue. Patients post about depression, seizures, or miscarriages - thinking they’re talking to friends. Then, a pharmaceutical company’s AI pulls that data into a database. No consent. No warning. No opt-out. One Reddit user put it bluntly: “I told my story to cope. Now it’s being sold to a drug maker.”

Real Wins - When Social Media Saved Lives

Despite the noise, there are undeniable victories.

Take the case of a new antidepressant launched in late 2023. Within 47 days, social media users started talking about dangerous interactions with St. John’s Wort - a common herbal supplement. Clinical trials didn’t catch this. Doctors didn’t know. But a nurse on Twitter noticed a pattern. Her post went viral. Pharmacovigilance teams picked it up. Within 80 days, the FDA added a black box warning to the label.

Venus Remedies, a mid-sized pharma company, used social media to spot a rare skin reaction to a new antihistamine. Patients described “red, burning patches” after taking the pill. The company traced 22 similar posts across three platforms. They confirmed it with hospital records. The drug’s label was updated - 112 days faster than the traditional system. That’s not just efficiency. That’s life-saving.

And it’s not just big companies. A 2024 survey found that 43% of pharmaceutical firms have detected at least one major safety signal from social media in the last two years. That’s a game-changer.

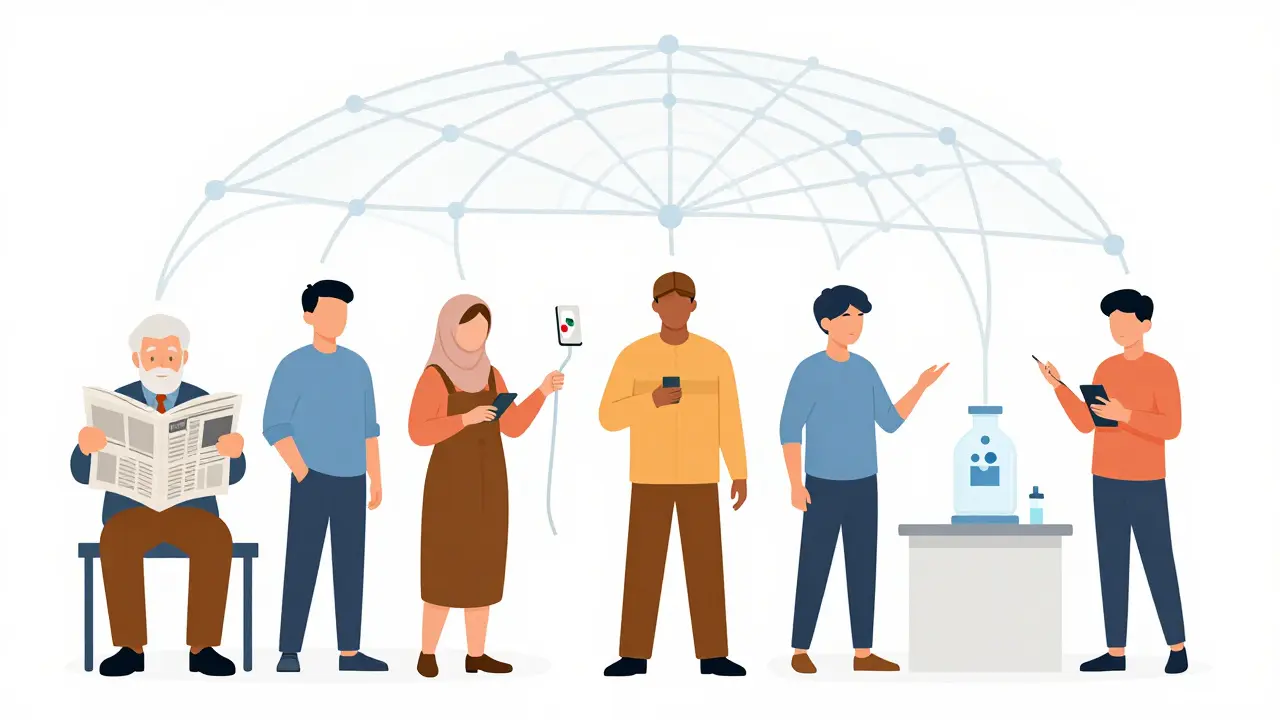

Who’s Left Out? The Silent Majority

Here’s something no one talks about: not everyone is on social media.

Older adults? Many don’t use Twitter or TikTok. Low-income communities? They might not have smartphones or reliable internet. People in rural areas? Their networks are small, and their health concerns stay private. And in countries with strict internet censorship? Forget it.

This creates a dangerous bias. Social media pharmacovigilance only sees the voices of the connected. That means we might miss risks that affect vulnerable groups - people who rely on older, cheaper drugs, or those who can’t afford to see a specialist. Dr. Elena Rodriguez warned in 2023: “If we only listen to the people online, we’re designing safety systems for the privileged - not the public.”

The Future: AI, Regulation, and Trust

Regulators are catching up. In 2022, the FDA said social media data could be used - but only if it’s validated. In 2024, the EMA made it mandatory: companies must now document their social media monitoring methods in every safety report.

The FDA’s new pilot program, launched in March 2024, is testing AI tools intended to cut false positives below 15%. That’s ambitious. Current systems hover around 30-40%. If they succeed, this could become standard.

But trust is the biggest hurdle. Patients won’t share health details if they think it’s being harvested. Companies won’t use the data if regulators don’t accept it. And regulators won’t trust it if the data is messy.

The solution? Transparency. Clear rules. Opt-in systems. Maybe one day, when you download a new medication app, you’ll see a checkbox: “Allow us to anonymously monitor public posts about this drug for safety research.” If enough people say yes, the system works. If they say no? It collapses.

Right now, social media pharmacovigilance is like a powerful but uncalibrated tool. It can save lives - or cause panic. It can expose hidden dangers - or distract from real ones. The tech is here. The data is flowing. But the rules? They’re still being written.

What Comes Next?

The market for this tech is exploding. It’s expected to grow from $287 million in 2023 to $892 million by 2028. Europe leads adoption. Asia lags behind - partly because of strict privacy laws. The U.S. is in the middle.
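For a sense of scale, the two market figures can be turned into a yearly growth rate. The sketch below assumes growth compounds evenly over the five years from 2023 to 2028, which the forecast itself does not state. The function name is illustrative.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in millions of dollars, as quoted above.
cagr = compound_annual_growth_rate(287, 892, 2028 - 2023)
print(f"{cagr:.1%}")  # 25.5%
```

That works out to the market growing by about a quarter every year, if the forecast holds.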

But growth doesn’t mean success. True progress will come when:

- AI filters out noise better than humans

- Patients know their data is being used ethically

- Regulators accept social media reports as legitimate evidence

- Pharmaceutical companies stop treating it as a PR tool and start treating it as a safety system

For now, it’s a supplement - not a replacement. Traditional reporting still matters. Doctors still matter. But social media? It’s no longer a curiosity. It’s a necessary layer in the safety net. And if we get it right, it could prevent thousands of avoidable injuries. If we get it wrong? We’ll be chasing ghosts - and missing the real dangers.